Ken's Sandbox

Asset Creation Evolved…Playing in the Deep Learning Sandbox

I believe the Kaggle world record is around 97% accuracy at identifying which class an image belongs to over a 10,000-image test set. Humans score about 94% on the same task. I was quite happy with the 93.20% accuracy I eventually got by tuning my CNN and playing with different configurations and layer depths. It was very interesting to experiment with the trade-off: some models learned very quickly but topped out at, say, 80% accuracy, while others learned far more slowly and took much longer to train but could break 90%.

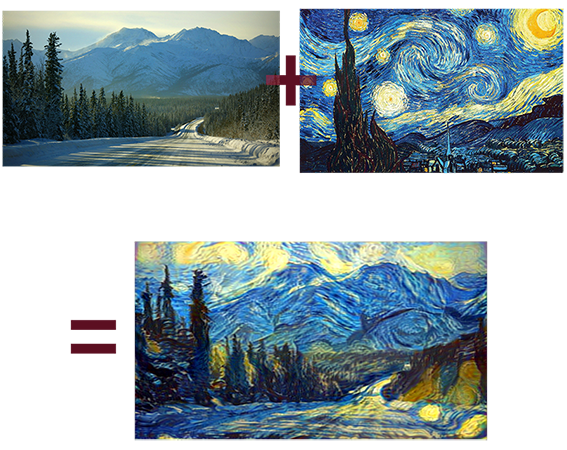

My real interest in machine learning is how it can be applied to visual problems and to tools that learn from the user, perhaps one day anticipating what the user wants to do. When the paper "A Neural Algorithm of Artistic Style" came out, I was very interested in the possibilities of quantifying an artistic style. After moving my training from the CPU to the GPU with Theano, CUDA 5.1 and a GTX 1060, and using part of the pretrained VGG16 network, I hacked together my own version of the very popular "Prisma" app running on my old Dell. The neatest thing about it was that the code could take in any style image I gave it and attempt to transfer it. With the Prisma app you are locked into a small pre-trained set of styles of their choosing. I need to get back to this area, as I have seen improvements on the web that I would love to incorporate for better, more consistent results than I was getting last summer.

I had two initial uses for this technology. The first was to learn our concept artist's style and transfer it to images from the web, generating my own concept art without the wait. He is a busy guy. The second was look dev. My idea here was to take an art style we liked and a "white box" version of a level, and envision in a few seconds what a game world might look like in that style, instead of having artists potentially spend days "arting-up" a level. This seemed like a good, cheap litmus test to see if a style was worth further investigation.
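For anyone curious what "quantifying an artistic style" looks like in practice, here is a minimal NumPy sketch of the style term from "A Neural Algorithm of Artistic Style": the style of an image at one layer is summarized by the Gram matrix of that layer's feature maps, and the loss compares the Gram matrices of two images. This is an illustration only, not the Theano code described in this post. The function names `gram_matrix` and `style_loss` are made up for the example, and random arrays stand in for real VGG16 activations.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of one layer's feature maps.

    features: array of shape (channels, height, width).
    Returns an array of shape (channels, channels).
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row per channel
    return flat @ flat.T


def style_loss(style_features, generated_features):
    """Per-layer style loss: scaled squared difference of Gram matrices."""
    c, h, w = style_features.shape
    a = gram_matrix(style_features)
    g = gram_matrix(generated_features)
    # Normalization from the paper: 1 / (4 * N^2 * M^2),
    # with N = number of channels and M = height * width.
    return np.sum((g - a) ** 2) / (4.0 * c ** 2 * (h * w) ** 2)


# Hypothetical stand-ins for activations of a style image and a generated image.
rng = np.random.default_rng(0)
style_acts = rng.standard_normal((8, 16, 16))
generated_acts = rng.standard_normal((8, 16, 16))
print(style_loss(style_acts, generated_acts))
```

In a full style transfer this loss would be summed over several layers, combined with a content loss, and minimized with respect to the pixels of the generated image.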

For my future ML work, beyond making my Prisma hack more robust, I hope to explore the "enhancement" algorithms that are starting to pop up and have been used to upres textures. I also want to explore applying style transfer to textures and then dealing with the caveats of UV islands and tiling. I have seen companies like Artomatix pursuing similar ideas, which is exciting and validating.
